Case Study

Platform

Mobile-Native

Associates' App

Role

Product Designer

(UX Strategy, Research, Design)

Team

CEO, Process Engineers, QA, Dev

The Problem

Our pilot was at risk — manager uploads weren’t enough to train the model in time.

The AI model needed far more training data than managers could provide through audit uploads. Without a scalable way to increase photo volume quickly, the model wouldn’t reach accuracy goals — putting the pilot and future rollout at risk.

The Ask

Increase entries to maintain timelines

The model needed significantly more photos to reach accuracy before rollout deadlines.

Maintain the process identity already supported by SLT

We couldn’t introduce visible friction or redefine workflows already vetted by leadership.

Design for effortless adoption and scale

The new step had to feel intuitive and seamless — easy to adopt now, and easy to scale later.

What We Built

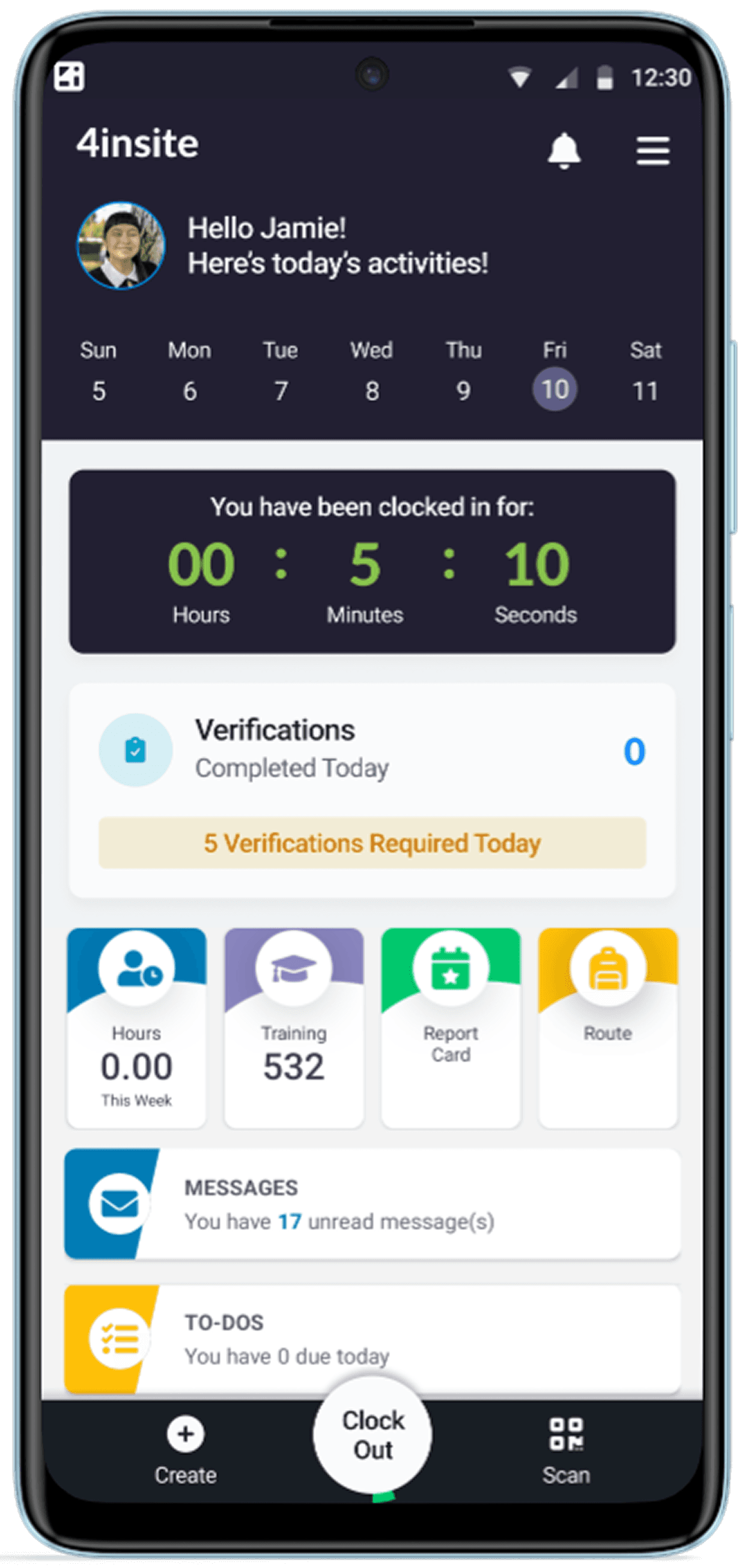

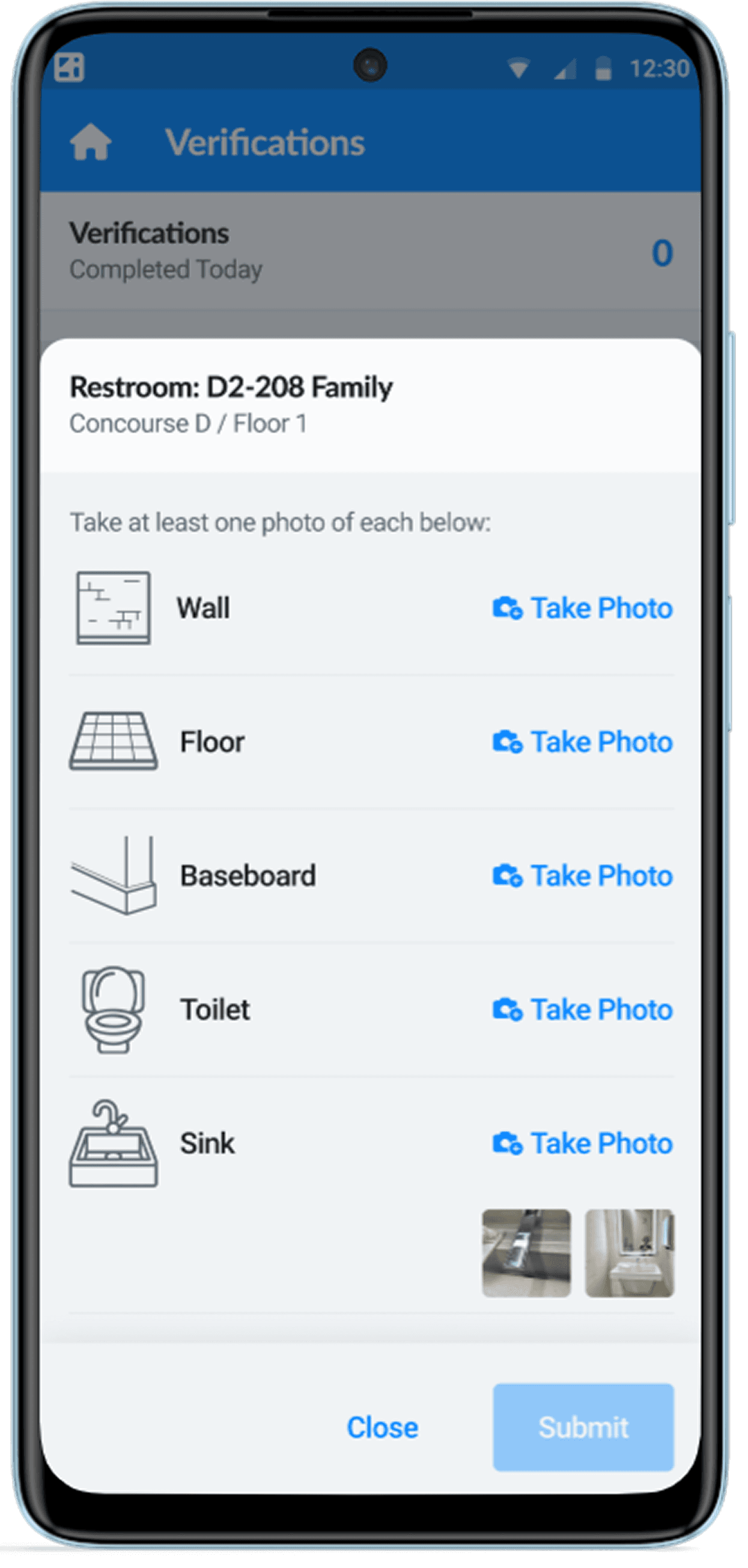

Introduced a solution through a low-friction experience

We added a lightweight verification step to the existing area list — the same tool associates already used to track their cleaning routes.

Scaled the original verification model to associates

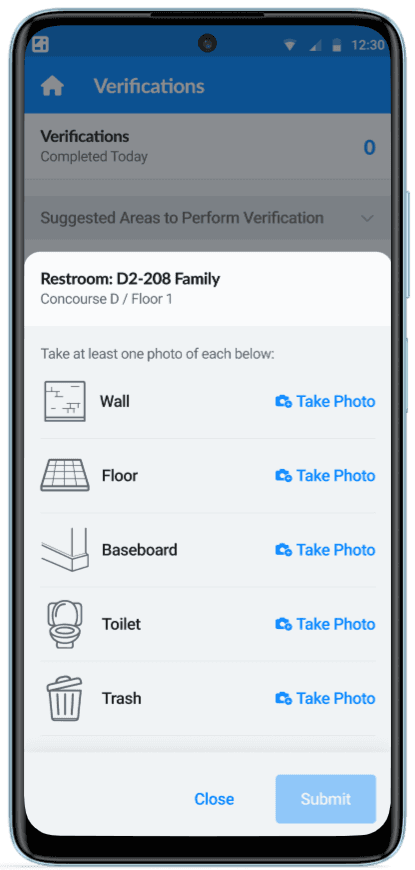

Kept the original manager-led verification system intact. The same logic applied: predefined elements, required photo capture, and review when needed.

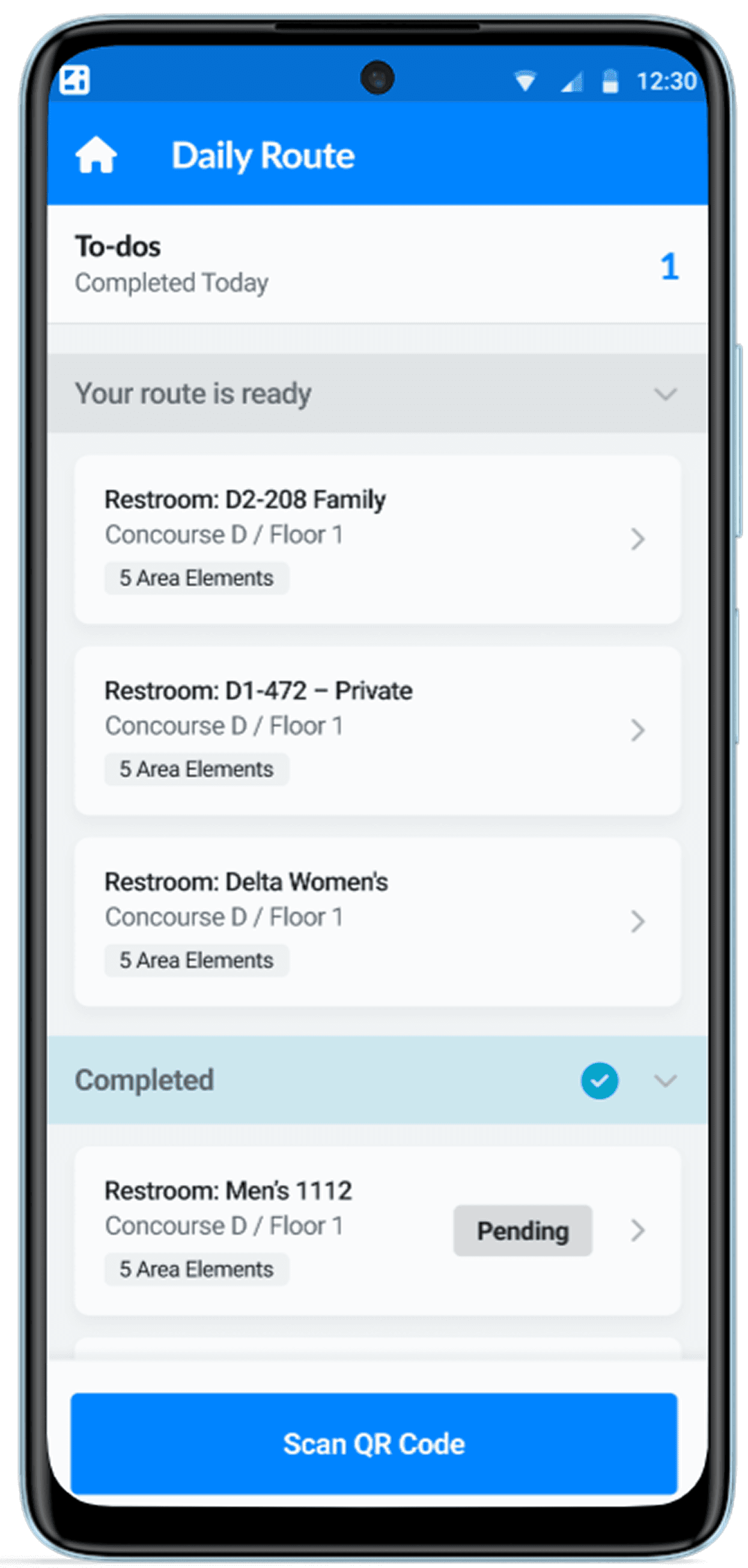

Ensured integrity by adding a confirmation by managers

Every submission was routed to managers for review before syncing to the AI model. This kept data quality high and reinforced trust.

The Outcome

The new flow led to a 10x increase in photo uploads within weeks — enough to train the model on time. We shifted the manager’s role from uploader to reviewer. The pivot unblocked our AI rollout path and created a scalable pattern for future sites.

You're about to enter the realm of details. Below is the detailed process that led to the end product.

The Plan & Process

We had to explore how to grow entry volume without adding work — otherwise scalability would stay out of reach.

Before designing anything, I stepped back to understand why photo volume was low — and where a shift in behavior might naturally fit. The goal wasn’t just to build a new feature — it was to embed a smarter interaction inside routines that were already happening.

I analyzed the audit workflow and usage data to understand current upload patterns and identify the upload ceiling.

Questions I wanted to answer:

What triggers a photo entry today? Who does it, and how often?

Is the task intrinsically motivating (e.g., it helps them) or compliance-driven?

Methods:

Task-Flow Analysis

Mixpanel Boards

Then I zoomed out, using interviews to map all touchpoints where photo capture could naturally happen:

Before/after task completion — to show work was done

Documenting maintenance issues — as part of the "Good Catch" system

End-of-shift reports — to capture conditions or site notes

None of these moments were designed for AI — but the behavior was there.

Lastly, I modeled the manager’s day with every possible upload to see if the numbers could actually work.

Questions I wanted to answer:

How many photos can a manager realistically upload per shift?

How much time does it take per upload, and where does it fit in their flow?

What are their competing priorities (auditing, reporting, meetings, etc.)?

If we scale sites or increase frequency, can this model grow — or does it break?

Findings & Key Insights

Research revealed we couldn’t meet model training goals without shifting who uploads

Even with perfect compliance, managers simply couldn’t generate enough photo entries to train the model within the pilot timeline. Their workflow wasn’t built for high-frequency input, and adding more tasks would have compromised their core responsibilities. This revealed a critical limitation: to scale data, we had to shift the responsibility downstream.

Key Insights

Managers' upload volume was tied to infrequent events

Photo uploads were tethered to audits — which didn’t happen often enough. The lack of frequency created a structural bottleneck, no matter how willing managers were to help.

Audits made up a small part of their day

Photo uploads only happened during audits — which accounted for about 20% of a manager’s time. The rest was spent on reporting, meetings, coordination, and fire drills.

Even full integration couldn’t overcome the role’s limits

We explored every point in the manager workflow where photo uploads could fit — audits, check-ins, post-reports — but the total output still couldn’t meet model needs.
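The ceiling described here can be sanity-checked with back-of-envelope arithmetic. The sketch below is a minimal illustration, not the model used in the project: only the 20% audit share comes from the research above, while the shift length, minutes per upload, headcount, and photo target are placeholder values chosen to show the shape of the calculation.

```python
from dataclasses import dataclass


@dataclass
class ShiftModel:
    shift_minutes: float       # length of one shift (placeholder)
    audit_share: float         # fraction of the shift spent on audits
    minutes_per_upload: float  # time one photo entry takes (placeholder)


def max_uploads_per_shift(m: ShiftModel) -> int:
    """Upper bound on photo entries if every audit minute went to uploads."""
    audit_minutes = m.shift_minutes * m.audit_share
    return int(audit_minutes // m.minutes_per_upload)


def meets_target(m: ShiftModel, uploaders: int, shifts: int, target: int) -> bool:
    """True if the whole group can reach the photo target in the given shifts."""
    return max_uploads_per_shift(m) * uploaders * shifts >= target


# Hypothetical inputs, not measurements from the pilot.
manager = ShiftModel(shift_minutes=480, audit_share=0.20, minutes_per_upload=5)
print(max_uploads_per_shift(manager))
print(meets_target(manager, uploaders=5, shifts=20, target=10_000))
```

Because uploads are capped by the audit share of the shift, adding more upload moments inside the same role raises the bound only slightly. Changing who uploads changes `uploaders` and the share of the shift available, which is where the larger gain comes from.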

Shaping The Solution

We shared the findings in a design workshop with SLT — and aligned on shifting verifications to associates

After surfacing the workflow limitations, I ran a working session with the design director and SLT stakeholders to present findings and explore next steps. In that session, we aligned on a strategic pivot: move the verification step from managers to associates. This would position photo collection at the point of action — right after cleaning — and transform a routine behavior into a high-frequency input stream for AI training.

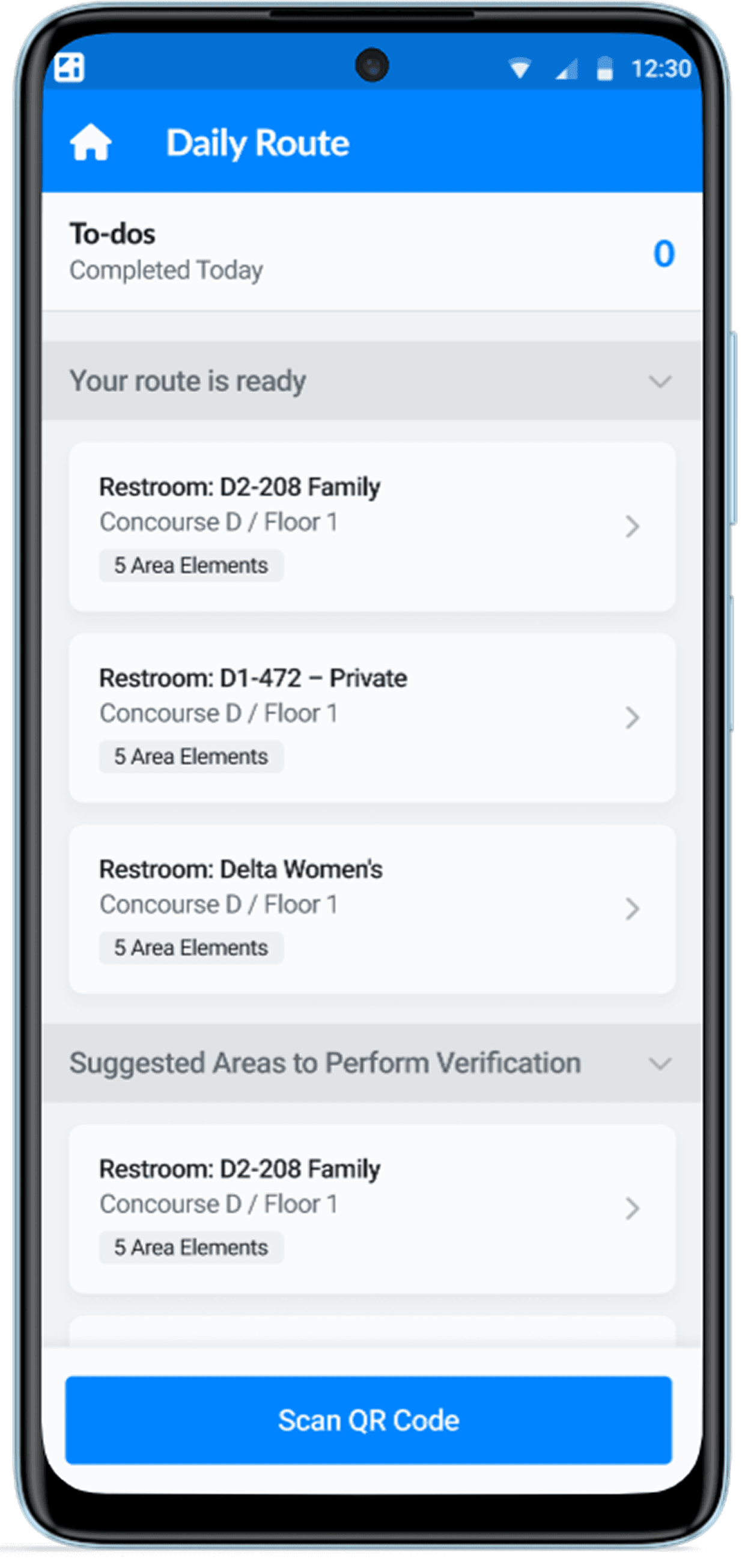

Associates already used their app to mark serviced areas — and they’d been trained to take photos

Associates were already in the habit of marking areas complete through their mobile route list. They were also trained to take photos using the “Good Catch” system, which flags maintenance issues — so capturing and submitting images wasn’t a new behavior. Based on simple volume math from manager audits, we estimated associates could easily handle 10+ verifications per shift without friction.

SLT raised concerns about losing trust if associates took over, so I countered with a manager review layer

To address leadership’s concern around quality control, I proposed adding a lightweight review step for managers. Only a subset of verifications — those flagged for issues like poor quality or duplication — would require their attention, preserving both trust and scale.

Engineering limitations blocked auto-flagging — so I introduced a manual flag to streamline manager reviews.

The team initially considered flagging low-score submissions automatically, but found that AI thresholds varied too widely between locations. Instead, we aligned on adding a manual flag option for associates — giving them a way to surface questionable cases while maintaining fairness across the system.
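The review logic described in this section can be sketched in a few lines. This is an illustrative outline under stated assumptions, not the production implementation: the names `Verification`, `ReviewPriority`, `review_queue`, and `ready_for_training` are hypothetical, and the sketch assumes every submission waits for manager approval while associate-flagged ones are surfaced first.

```python
from dataclasses import dataclass
from enum import Enum


class ReviewPriority(Enum):
    ROUTINE = "routine"
    NEEDS_ATTENTION = "needs_attention"


@dataclass
class Verification:
    area_id: str
    photo_path: str
    flagged_by_associate: bool = False  # the manual flag, set in the associate app
    approved_by_manager: bool = False   # set during manager review


def review_priority(v: Verification) -> ReviewPriority:
    """Flagged submissions need a manager's attention; the rest are routine."""
    if v.flagged_by_associate:
        return ReviewPriority.NEEDS_ATTENTION
    return ReviewPriority.ROUTINE


def review_queue(submissions: list[Verification]) -> list[Verification]:
    """Order the manager's queue so flagged submissions come first."""
    # sorted() is stable, so submission order is kept within each group.
    return sorted(submissions, key=lambda v: not v.flagged_by_associate)


def ready_for_training(v: Verification) -> bool:
    """Only manager-approved submissions sync to the AI model."""
    return v.approved_by_manager
```

Keeping the flag as a plain boolean set by the associate avoids any dependency on per-location AI score thresholds, which is the constraint that ruled out automatic flagging.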

We aligned on using the managers' build with minor design enhancements to meet the timeline

With limited time before the pilot close, we agreed to build within the constraints of the current system. This meant reusing components already in production to avoid delays — a practical decision that kept us moving without compromising the goal.

We set a soft minimum to normalize the flow — and used bonuses to scale it

To ensure the new workflow would produce enough data, we introduced a behavioral baseline: five verifications per shift. The SLT supported using light incentives to encourage more participation without turning it into a hard requirement.

The Solution Design

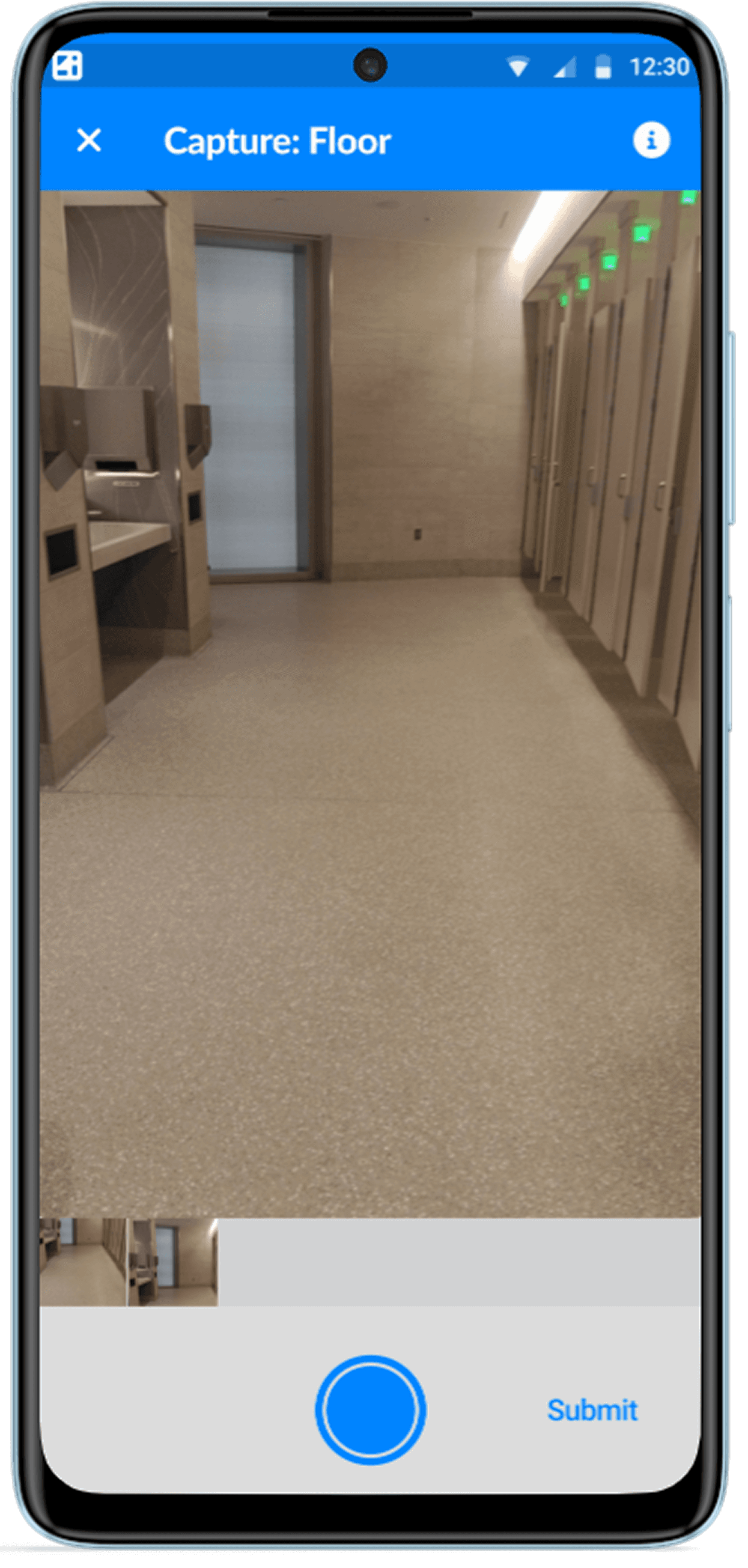

As engineers scaled the backend, I focused on designing for task clarity and confidence

I focused on making the new associate-facing flow feel approachable and frictionless. That meant designing a guided experience that worked for low-tech users — short, visual, and confirmation-based — without slowing them down or requiring training. The result was a layered system: backend complexity with frontend simplicity.

Instead of introducing a new app section or screen, verifications were embedded into the associate’s route — the same list they already used to manage cleaning tasks. This kept the flow familiar and ensured it was used in context, not as an extra step.

Then I created a custom icon set to reduce cognitive load and support faster decisions

The icons weren’t just visual polish — they helped associates scan the flow quickly, understand what each element referred to, and act without second-guessing. This was especially important in shared spaces with similar-looking areas. The goal was to reduce reading effort and make the flow feel more approachable and task-based.

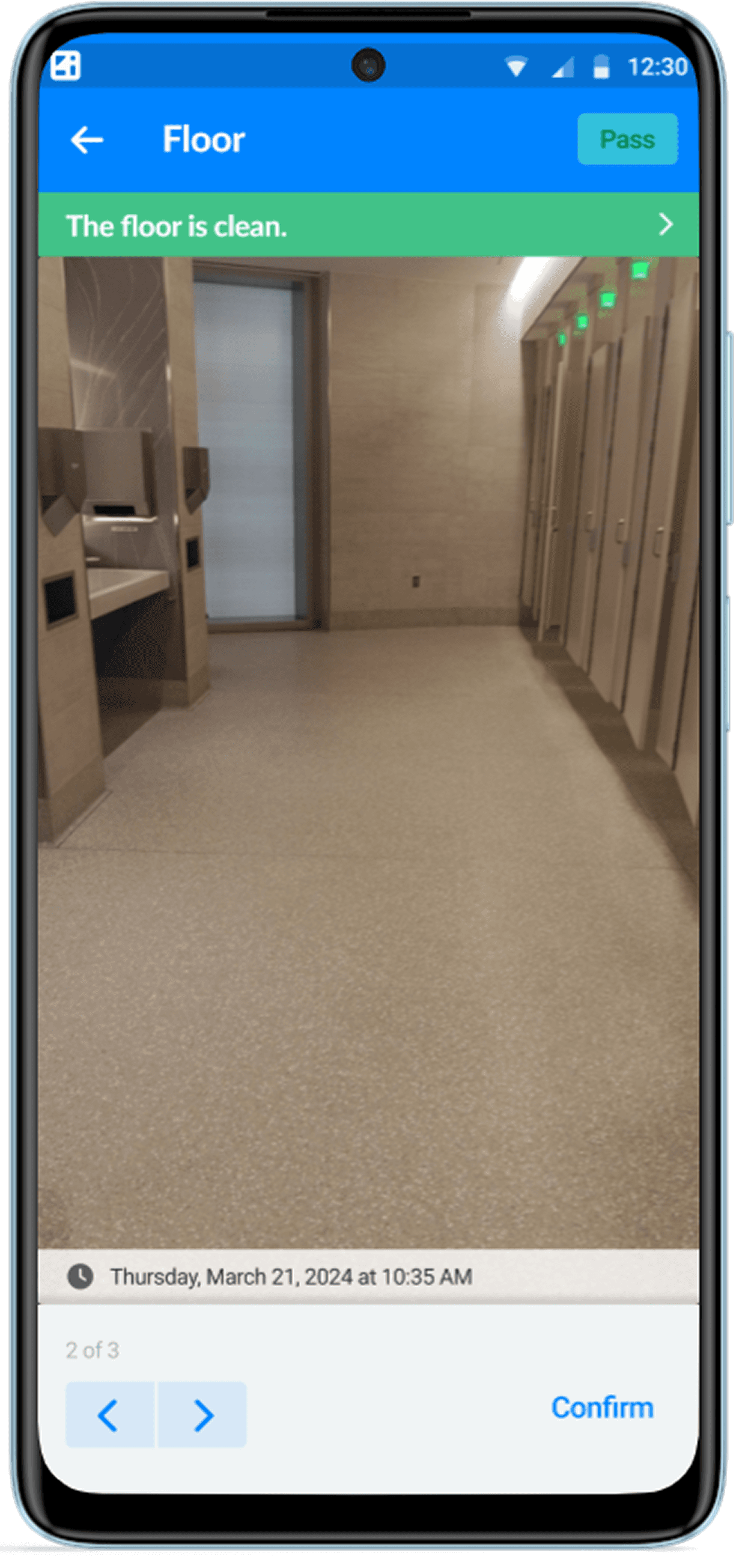

To keep verifications rapid, I minimized steps and kept feedback inline and minimal

Inline feedback: associates don’t second-guess what just happened

No modal interruption: keeps the interaction fluid, not jarring

Behavioral reinforcement: helps associates learn how the system sees cleanliness over time

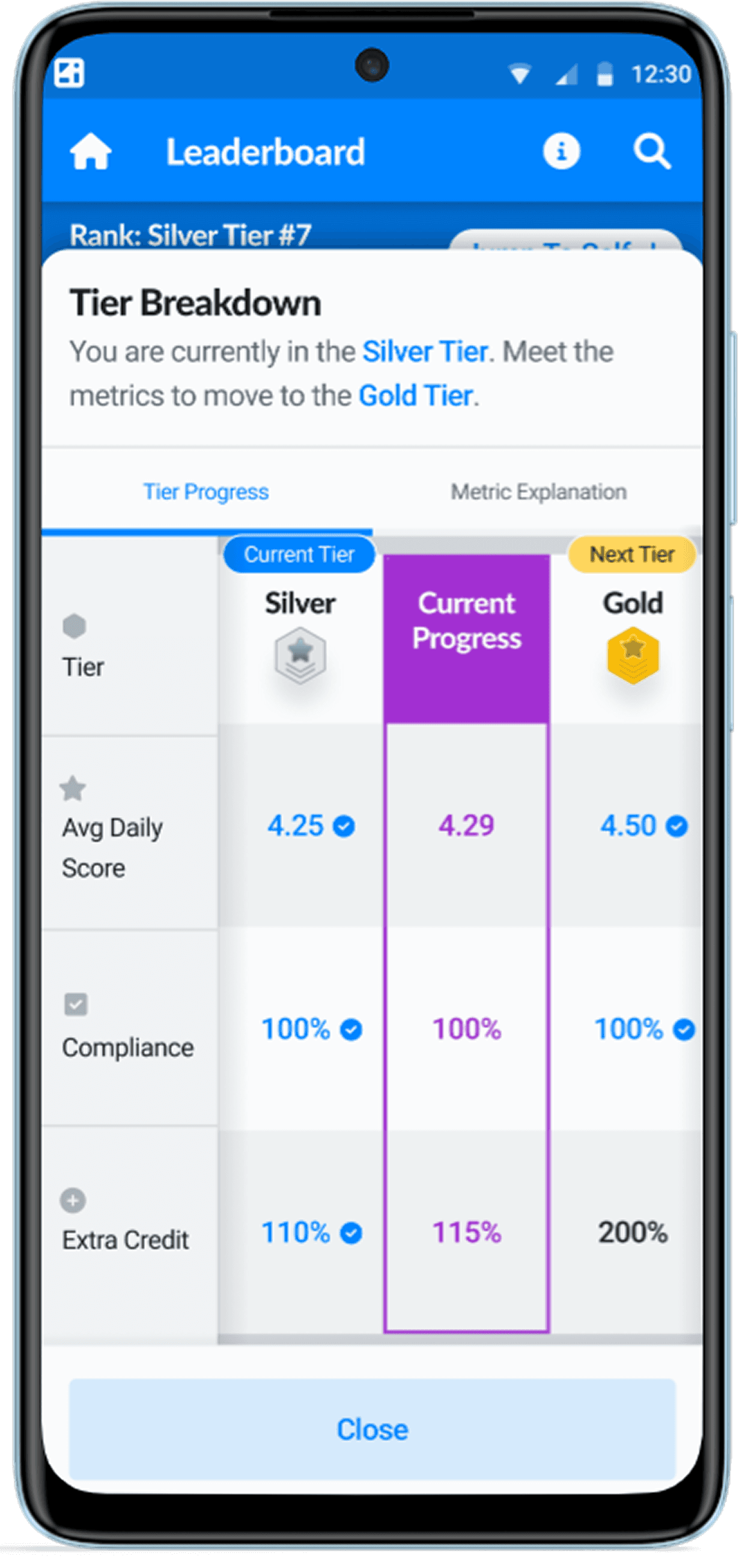

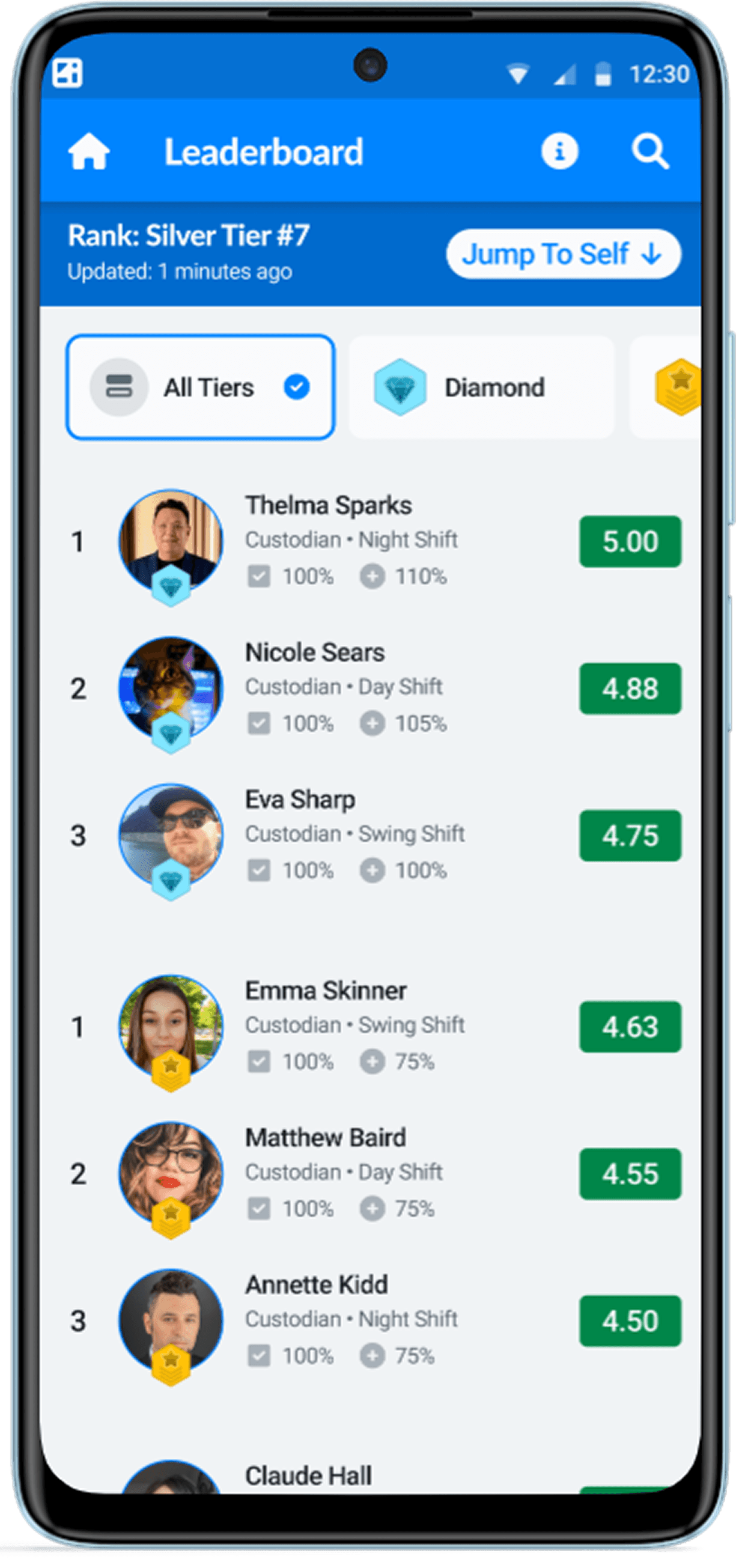

I added a three-tier leaderboard to support the bonus structure and increase adoption

The leaderboard reflected three contribution levels — minimum, above average, and top performers. This structure made it easy for associates to see their progress and understand how bonuses were earned, while keeping the system optional and inclusive. The goal was to normalize the new behavior and make adoption feel rewarding, not enforced.
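One way the three levels could be computed is sketched below. The soft minimum of five verifications per shift comes from the text; everything else is an assumption made for illustration, including the function name `assign_tiers`, the use of the team mean for "above average", and the number of top slots.

```python
from statistics import mean
from typing import Optional

MINIMUM_PER_SHIFT = 5  # soft minimum described in the case study


def assign_tiers(counts: dict[str, int], top_slots: int = 1) -> dict[str, Optional[str]]:
    """Map each associate to 'minimum', 'above_average', 'top', or None.

    counts holds verifications per shift, keyed by associate.
    None means the associate has not reached the soft minimum yet.
    """
    if not counts:
        return {}
    average = mean(counts.values())
    # Lowest count that still falls inside the top slots (hypothetical rule).
    top_cutoff = sorted(counts.values(), reverse=True)[:top_slots][-1]

    tiers: dict[str, Optional[str]] = {}
    for name, count in counts.items():
        if count < MINIMUM_PER_SHIFT:
            tiers[name] = None
        elif count >= top_cutoff and count > average:
            tiers[name] = "top"
        elif count > average:
            tiers[name] = "above_average"
        else:
            tiers[name] = "minimum"
    return tiers


# Made-up counts for illustration only.
print(assign_tiers({"ana": 12, "ben": 7, "cy": 5, "dee": 3, "eli": 9}))
```

Returning `None` below the minimum, rather than a "below target" label, matches the intent of keeping the system optional: the leaderboard shows progress without marking anyone as failing.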

The Outcome

10x more photo entries, full adoption within the pilot, and a new quality control system that extended site coverage beyond manager-led verifications

Within weeks, associates were submitting 10x the photo volume managers had provided — enough to train the model on time. The new flow required no training, folded into daily routines, and was adopted across all pilot users. With manager review layered in, the system maintained integrity and gave us full visibility across every cleaned area — something audits alone couldn’t deliver.