↓ Case Study

Platform

Role

Team

The Problem

OLIVAI automated quality control, which drove a 10× increase in data but reduced signal clarity. Critical signals were buried in the noise, slowing decisions and affecting performance.

The Ask

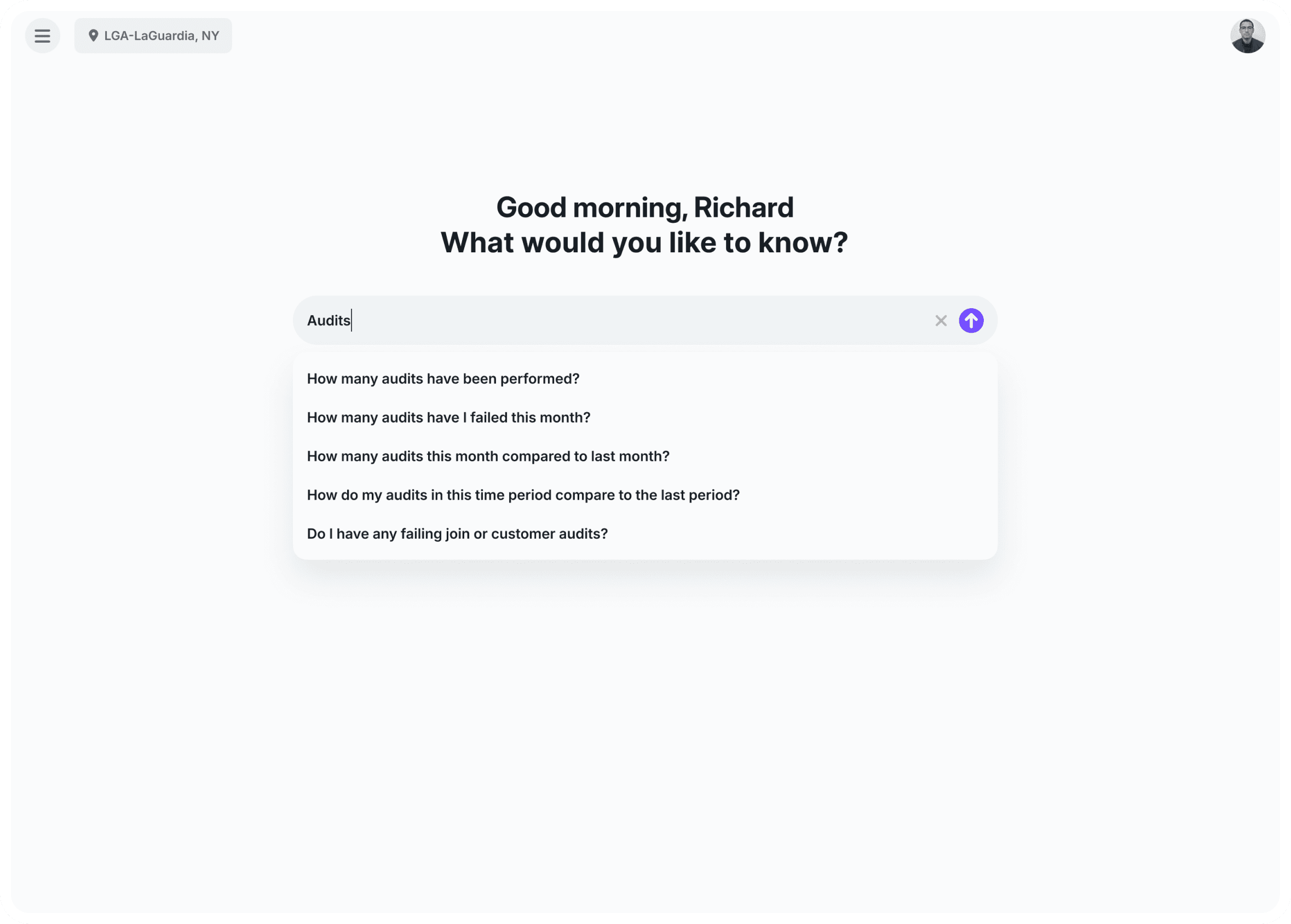

Build an AI-powered chat interface

Allow managers to ask questions and receive guidance directly, reducing dependence on complex dashboards and manual analysis.

Reduce time to insight and issue resolution

Enable managers to identify problems faster and move from signal to action more quickly across quality, people, and financial workflows.

Define OLIVAI’s standalone product identity

Establish a brand and design library for OLIVAI as a standalone product to support future contracts and scale across AI-driven features.

What We Built

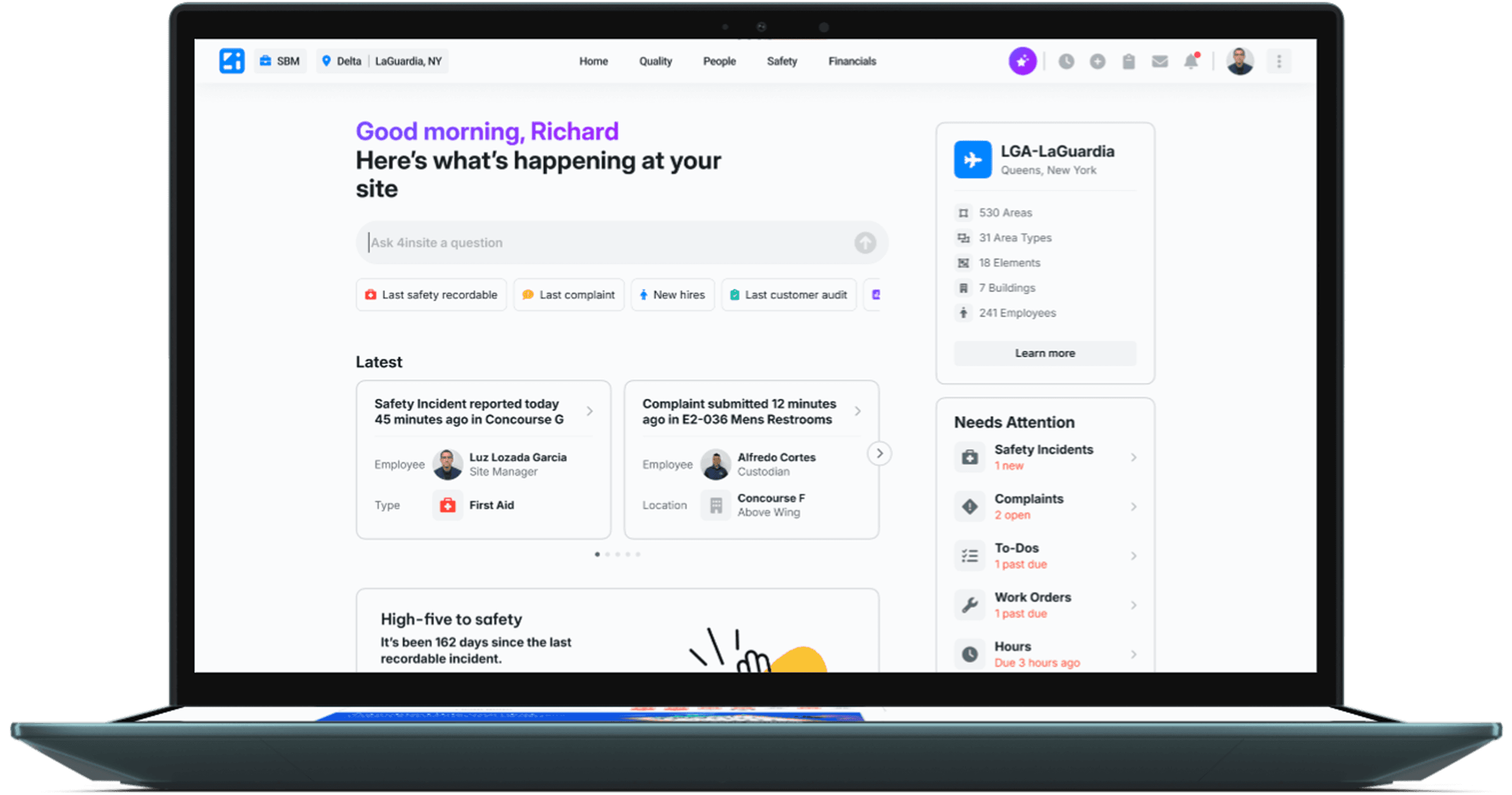

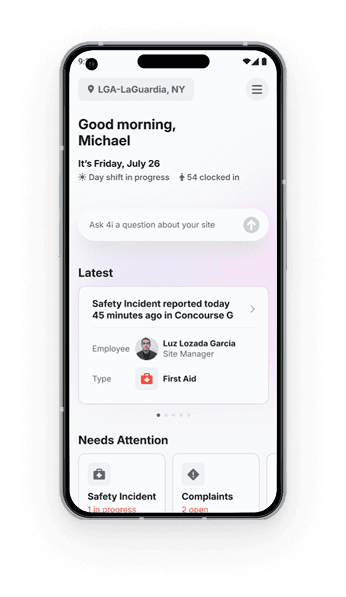

A role-aware, interactive AI experience

Combined conversational interaction with pre-structured stories, allowing managers to engage with AI guidance without relying on open-ended chat or manual query formulation.

A decision-driven structure for operational signals

Redesigned how data was grouped, prioritized, and presented to align with managers' mental model, bridging the gap between raw metrics and actionable decisions.

The OLIVAI design library for AI-driven experiences

Established a reusable set of patterns, components, and interaction models to standardize how AI guidance, confidence cues, and actions are delivered across OLIVAI features.

The Outcome

Site Pulse created a new structure for operational signals, delivering 4× more insights per session, and became the blueprint for how data and AI initiatives are designed across the organization.

You're about to enter the realm of details. Below is the detailed process that led to the end product.

The CEO's Vision

Build an AI-powered chatbot that interprets data, recommends actions, and measures results — in 90 days

The vision was explicit: managers should be able to get answers and direction through chat alone — without charts, dashboards, or manual analysis. I facilitated early alignment with leadership and partners to clarify what success would mean for this vision, and we agreed on two initial OKRs: reducing time to insight and shortening time from issue identification to resolution.

While engineering assessed technical feasibility, I explored how a chat-based experience could function in real workflows

My goal was to expand the logic early—using interaction patterns and framing to help engineers reason about scope, constraints, and system behavior before implementation.

The Alignment Workshop

I needed clarity before going deep, so I led a cross-functional workshop to align on constraints, risks, and what success could realistically look like

Technical feasibility

Query limitations: Engineering could only support ~100 predefined question-and-answer flows within 90 days

Infrastructure debt: The underlying data was inconsistently tagged across modules, making full automation and true natural-language querying infeasible in the short term

Execution risks

Would predefined Q&A actually cover the moments where managers needed to make decisions?

Would managers need visual data confirmation, or would text answers suffice?

Would users adopt conversational search — or default to familiar navigation?

The Execution Model

To meet the 90-day mandate, I designed an execution model that enabled parallel progress without dependency bottlenecks

Instead of sequential handoffs, I structured the work around fast learning loops. Directional research set early constraints, engineering pressure-tested feasibility in parallel, and deeper validation refined assumptions as concepts evolved.

Design explored continuously across the loop, allowing us to adjust before committing to build.

The Findings

While early patterns suggested chat could help, real usage and environment constraints told a different story

User behavior aligned with our constraints: the vast majority of managers relied on the same core data points. Their mental model (Trigger → Scenario → Decision) also fit within the limits of a free-form chat interface.

However, the real-world environment challenged that direction. Managers worked in noisy, time-pressured settings. They entered sessions with a trigger and needed clear next steps — fast.

In that context, unstructured chat slowed decisions rather than accelerating them.

The Stakeholder Facilitation

To move forward without creating a vision–versus–design standoff, I introduced three concepts designed across a spectrum of tradeoffs.

Each concept intentionally represented a different point on the spectrum—from vision-led to behavior-led. The goal was to shift the conversation from opinion to evidence by proposing comparative validation as the most effective next step for decision-making.

The Decision

The presentation reframed the decision, and leadership approved comparative validation to test adoption and engagement

The Validation Setup

I designed the experiment to compare concepts through decision behavior: speed, confidence, and friction

Unmoderated scenario modeling

Same scenario, different concepts.

Compared time to identify what, understand why, and choose a next action.

Q/A quality checks

Same questions, same data.

Compared accuracy, response speed, and confidence to act.

Qualitative cross-checks

Side-by-side session review.

Observed hesitation, backtracking, and completion loops.

The Findings

Structured stories consistently outperformed chat-first concepts in speed, confidence, and follow-through

Before being introduced to structured story cards, 91% of users applied a trigger → scenario → action flow in chat.

Once pre-built stories appeared, 80% oriented immediately, without training.

Users spent more time on the story dashboard, but reached decisions faster.

Overall, this model produced faster decisions with less hesitation than the other concepts.

The Concept I Pitched

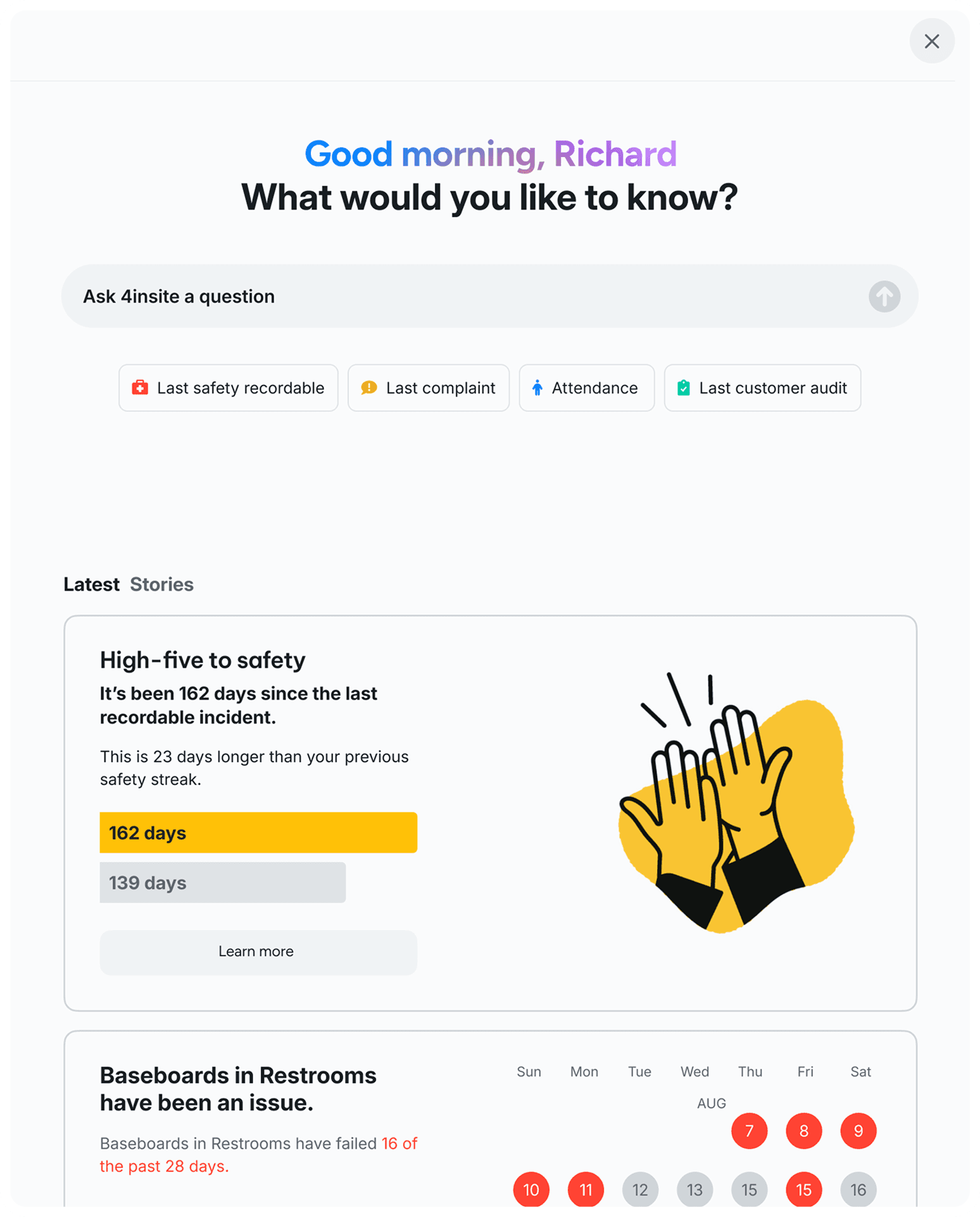

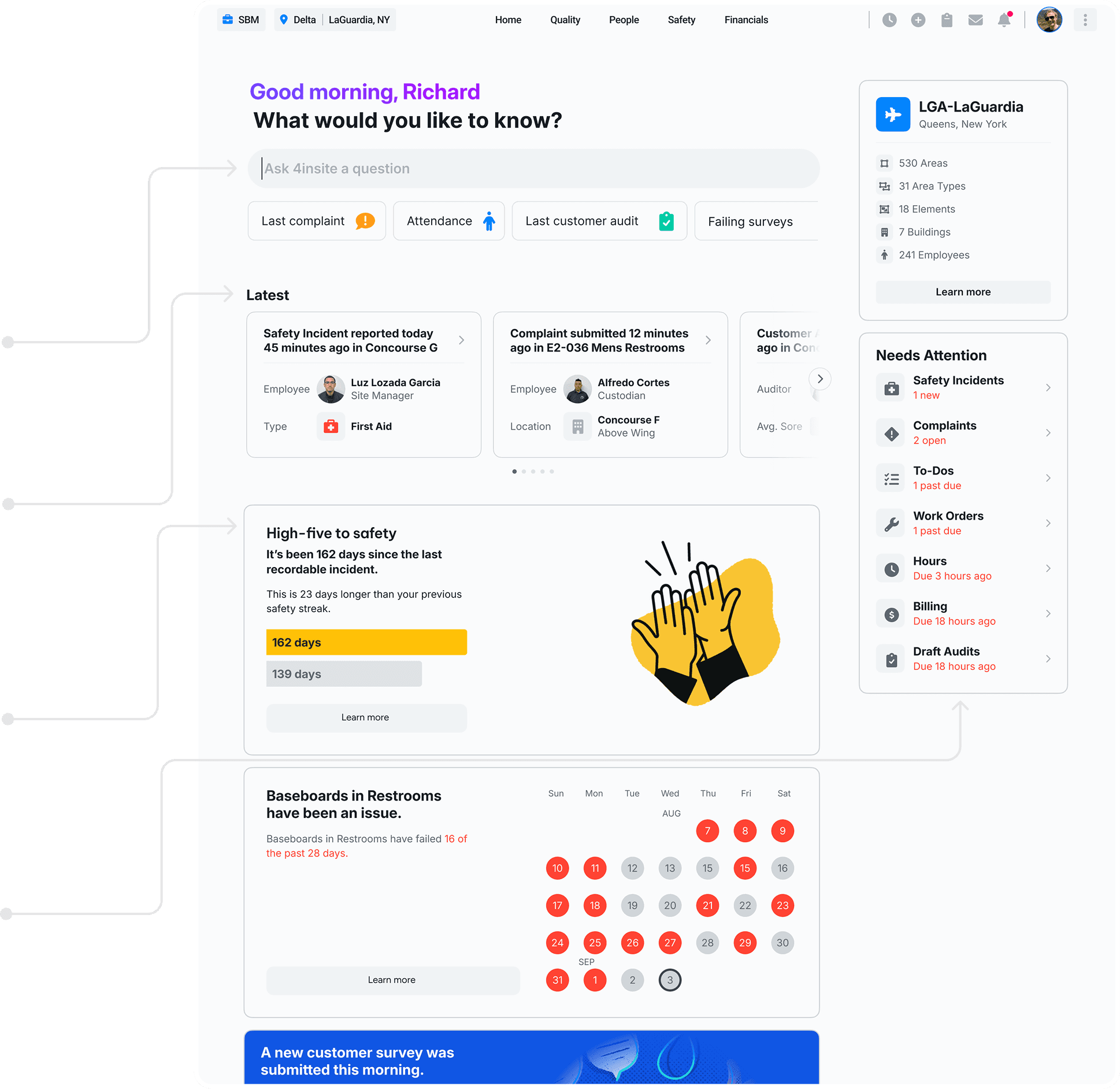

I evolved the strongest direction into a hybrid experience combining chat with structured decision support

Chat remains the entry point. As users scroll, it collapses into pre-built story cards that surface context, signals, and next actions—matching how decisions actually unfold without forcing a mode switch.

The Solution Breakdown

The story dashboard translated the CEO’s vision into a workflow that solved real user problems

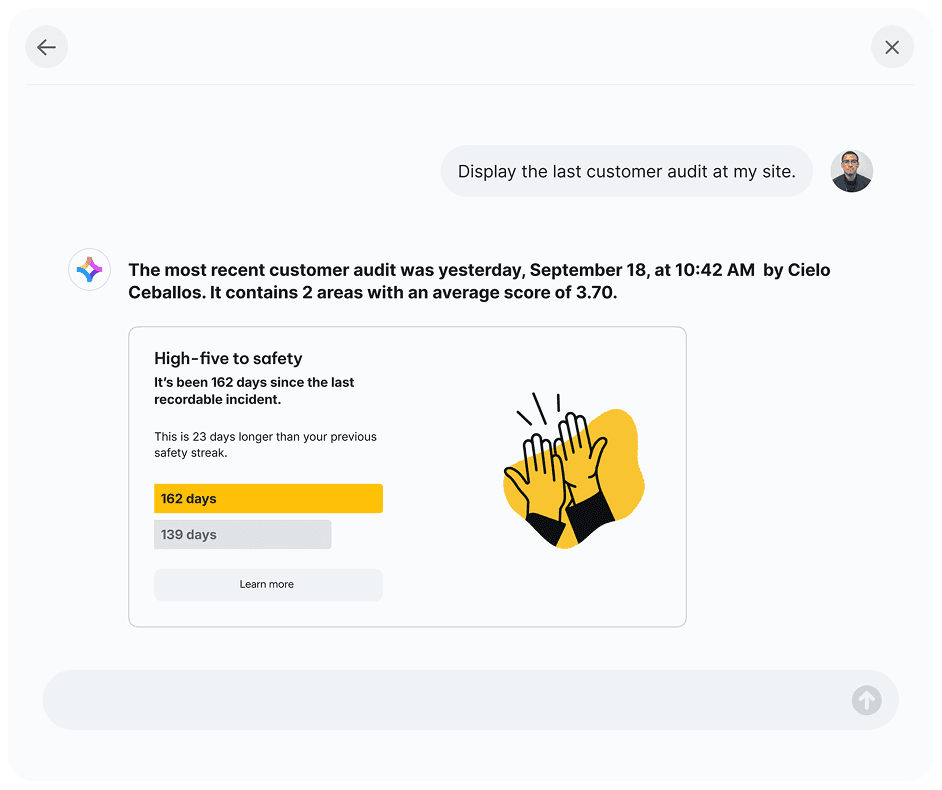

Chat is the entry point

Embedded in the pre-built stories dashboard for constant access

Latest activity

A real-time snapshot of the site, built for quick orientation.

Structured stories

Narrative summaries aligned to the user’s mental model—trigger → scenario → action.

Needs attention

Benchmarked CTAs tied to role and site state—replacing “what should I do?” with a clear next action.

The Design System Strategy

The CEO asked for a new product identity. I delivered it by evolving the system, not rebuilding it.

With a 90-day timeline and a live legacy platform, a full rebuild wasn’t realistic. Instead, I extended 4insite’s existing foundation and introduced a new pattern layer—allowing OLIVAI to feel distinct without fragmenting the ecosystem.

The Impact

Site Pulse helped managers act faster by turning fragmented data into clear decision paths

By consolidating signals across modules, Site Pulse reduced decision ambiguity and helped managers quickly understand what mattered, why it mattered, and what to do next — without deep analysis or manual synthesis.

Impact metrics tracked during pilot rollout

The Organizational Impact

Site Pulse changed how the organization thinks about data and AI

The Reflection

Great design emerges at the intersection of people, constraints, and trust

Looking back, the work clearly showed that our strongest decisions came from embracing real constraints, reshaping information around how managers reason through cause and consequence, and treating AI support as a way to guide action and build confidence — even before full automation was viable.

This study discusses the highlights of this project

Get in touch for more details